A preliminary court hearing was held today in connection with the US appeal against a British court's decision not to extradite Assange. (Read more here.)

The US administration appears to have had some success in arguing some (but not all) of its grounds for being granted permission to appeal.

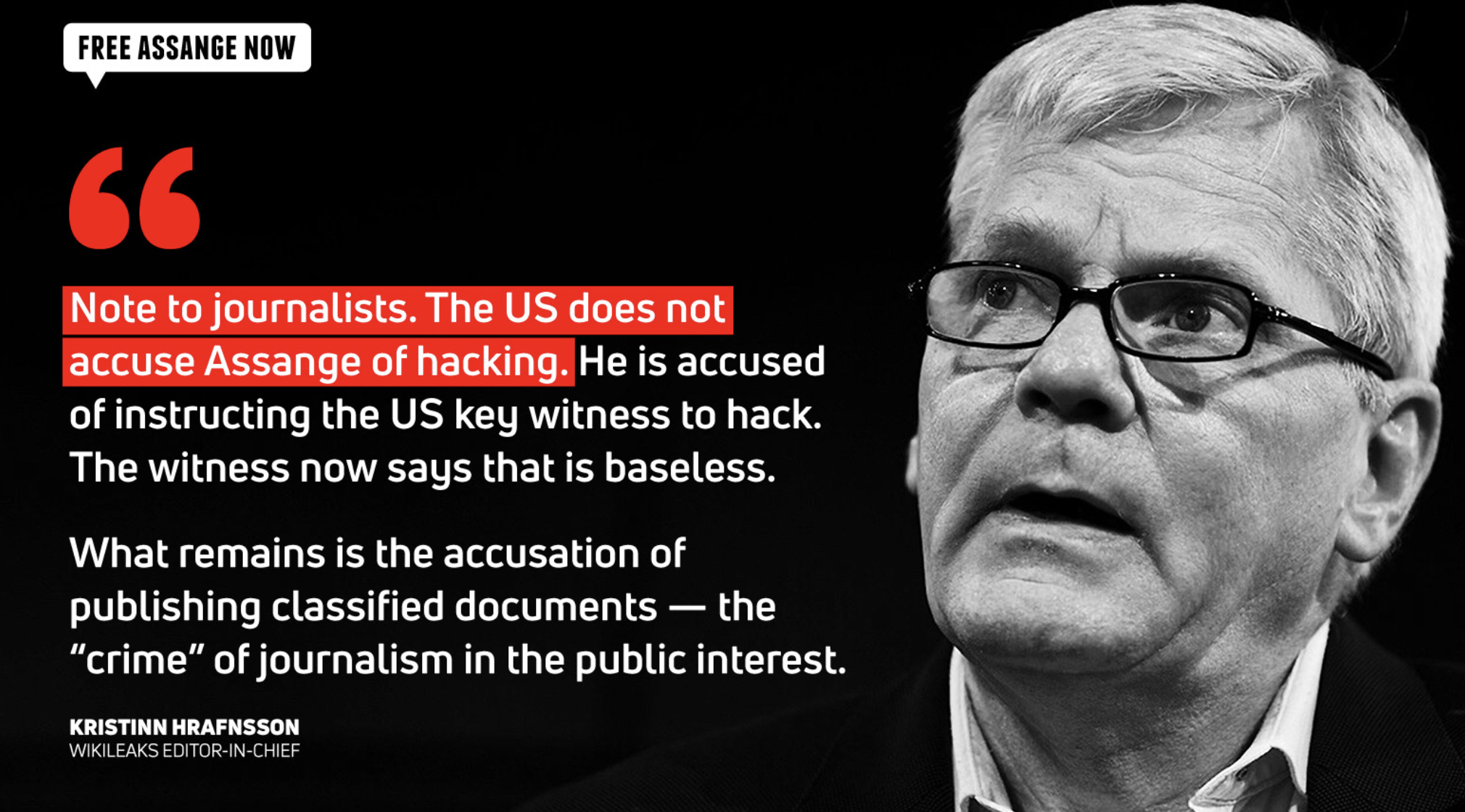

This does not necessarily mean, however, that the US indictment against the WikiLeaks founder has been strengthened as such, especially since the US administration's key witness has retracted earlier statements.

The next court hearing is scheduled for 27-28 October.

Read a more detailed account from the court here »